Zeren Chen1,2* Ziqin Wang1,3* Zhen Wang2* Huayang Liu2

Zhenfei Yin1,4 Si Liu3 Lu Sheng2† Wanli Ouyang1,4 Yu Qiao1 Jing Shao1†

1Shanghai AI Laboratory 2School of Software, Beihang University

3Institute of Artificial Intelligence, Beihang University 4University of Sydney

* Equal Contribution † Corresponding Author

Introduction

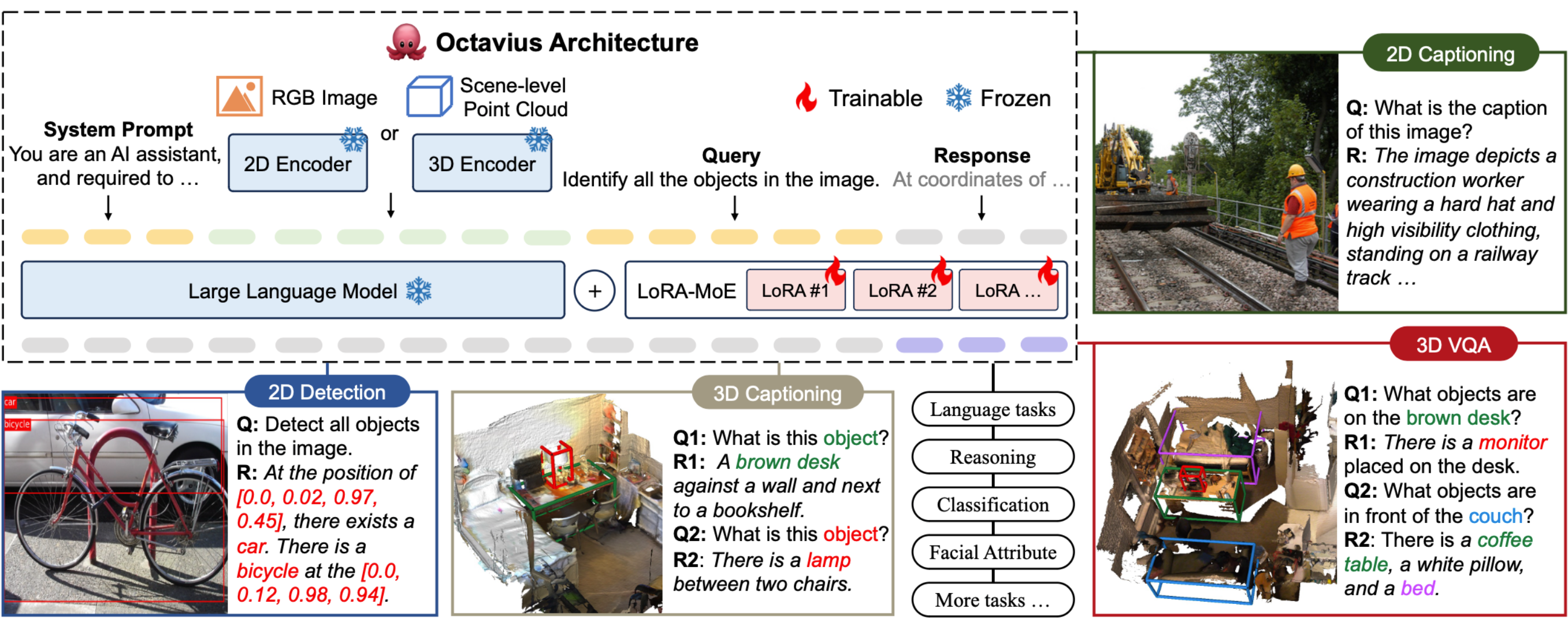

We propose Octavius, a unified multimodal large language model (MLLM) that can handle a variety of tasks across different modalities, including but not limited to 2D captioning, 2D detection, 3D VQA, and 3D dense captioning. By combining the well-known Mixture-of-Experts (MoE) with LoRA, a representative parameter-efficient fine-tuning (PEFT) technique, Octavius can be extended efficiently to more downstream tasks and modalities by learning additional LoRA modules. This alleviates the task interference that can arise in multimodal learning.
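To make the MoE + LoRA idea concrete, here is a minimal NumPy sketch of a linear layer whose frozen pretrained weight is augmented by a gated mixture of LoRA experts. It is an illustration under stated assumptions, not the Octavius implementation: the class names (`LoRAExpert`, `LoRAMoELinear`), the top-k softmax router, and routing on a per-instance feature vector are all hypothetical choices made for this example.

```python
import numpy as np


class LoRAExpert:
    """One low-rank adapter computing x -> (alpha / r) * x A^T B^T (hypothetical)."""

    def __init__(self, d_in, d_out, rank, alpha, rng):
        # A is small random and B starts at zero, so a fresh expert adds nothing.
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))
        self.B = np.zeros((d_out, rank))
        self.scale = alpha / rank

    def __call__(self, x):
        # x: (batch, d_in) -> (batch, d_out)
        return self.scale * (x @ self.A.T) @ self.B.T


class LoRAMoELinear:
    """Frozen base linear layer plus a gated mixture of LoRA experts (assumed design)."""

    def __init__(self, W, num_experts, rank=4, alpha=8.0, top_k=2, seed=0):
        self.W = W  # frozen pretrained weight, shape (d_out, d_in)
        self.rank, self.alpha, self.top_k = rank, alpha, top_k
        self.rng = np.random.default_rng(seed)
        d_out, d_in = W.shape
        self.experts = [LoRAExpert(d_in, d_out, rank, alpha, self.rng)
                        for _ in range(num_experts)]
        # Router: one logit per expert, computed from a per-instance feature.
        self.W_gate = self.rng.normal(0.0, 0.01, size=(num_experts, d_in))

    def add_expert(self):
        """Extend to a new task by appending one LoRA expert and one router row."""
        d_out, d_in = self.W.shape
        self.experts.append(LoRAExpert(d_in, d_out, self.rank, self.alpha, self.rng))
        new_row = self.rng.normal(0.0, 0.01, size=(1, d_in))
        self.W_gate = np.vstack([self.W_gate, new_row])

    def gate(self, instance_feat):
        # instance_feat: (batch, d_in), e.g. a pooled instruction embedding (assumption).
        logits = instance_feat @ self.W_gate.T  # (batch, num_experts)
        k = min(self.top_k, logits.shape[1])
        # Keep the k largest logits per row, mask the rest to -inf, then softmax.
        kth = np.sort(logits, axis=1)[:, -k][:, None]
        masked = np.where(logits >= kth, logits, -np.inf)
        masked = masked - masked.max(axis=1, keepdims=True)
        weights = np.exp(masked)
        return weights / weights.sum(axis=1, keepdims=True)

    def __call__(self, x, instance_feat):
        weights = self.gate(instance_feat)  # (batch, num_experts), rows sum to 1
        out = x @ self.W.T  # frozen base path
        for i, expert in enumerate(self.experts):
            out = out + weights[:, i:i + 1] * expert(x)
        return out


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    W = rng.normal(size=(6, 8))
    layer = LoRAMoELinear(W, num_experts=4, top_k=2)
    x = rng.normal(size=(3, 8))
    y = layer(x, instance_feat=x)
    print(y.shape)  # (3, 6)
```

Because each expert's `B` matrix starts at zero, appending an expert with `add_expert` leaves the layer's output unchanged until that expert is trained. This is one way to read "learning more LoRA modules" for new tasks, while the sparse router keeps each sample from mixing updates from every expert at once.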

Usage

Environment installation.

Prepare the instruction/benchmark datasets and the required pretrained weights for the LLM and the visual encoder.

Training scripts:

Image modality only

```shell
cd src
sh tools/Octavius/train_octavius_slurm.sh <YOUR_PARTITION> <NUM_GPU> \
    config/Octavius/octavius_2d_e4_bs64.yaml octavius_2d_e4_bs64
```

Point cloud modality only

```shell
cd src
sh tools/Octavius/train_octavius_slurm.sh <YOUR_PARTITION> <NUM_GPU> \
    config/Octavius/octavius_3d_e3_bs64.yaml octavius_3d_e3_bs64
```

Image & point cloud modalities (joint training)

```shell
cd src
sh tools/Octavius/train_octavius_slurm.sh <YOUR_PARTITION> <NUM_GPU> \
    config/Octavius/octavius_2d+3d_e6_bs64.yaml octavius_2d+3d_e6_bs64
```

We provide the pretrained Octavius model here.

Evaluation

We use ChEF to evaluate Octavius on both the image and point cloud modalities; see here for details.

Citation

```bibtex
@misc{chen2023octavius,
      title={Octavius: Mitigating Task Interference in MLLMs via MoE},
      author={Zeren Chen and Ziqin Wang and Zhen Wang and Huayang Liu and Zhenfei Yin and Si Liu and Lu Sheng and Wanli Ouyang and Yu Qiao and Jing Shao},
      year={2023},
      eprint={2311.02684},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

License

The project is licensed under CC BY-NC 4.0 (non-commercial use only), and models trained using the dataset should not be used outside of research purposes.